
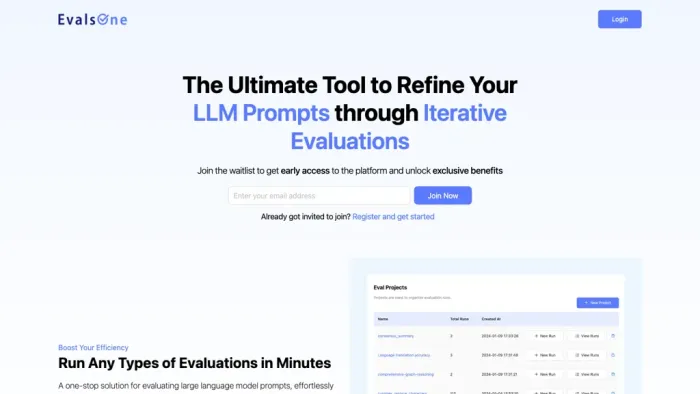

What is EvalsOne?

EvalsOne is a tool for refining LLM prompts through iterative evaluations. The platform is available through a waitlist, which offers early access and exclusive benefits. With EvalsOne, you can run many types of evaluations in minutes rather than setting each one up by hand.

It's a one-stop solution for evaluating large language model prompts: you set up evaluation tasks, run them, and get detailed assessment reports. The platform covers common evaluation scenarios such as dialogue generation, RAG evaluations, and agent assessments.

EvalsOne reduces the effort of getting started by offering multiple methods for preparing evaluation samples. It supports public models from OpenAI, Anthropic, Google Gemini, Mistral, and Microsoft Azure, as well as fine-tuned and self-hosted models.

KEY FEATURES

- ✔️ Refining LLM prompts through iterative evaluations.

- ✔️ Boosting efficiency by running all types of evaluations in minutes.

- ✔️ Evaluating large language model prompts effortlessly and obtaining detailed assessment reports.

- ✔️ Support for common evaluation scenarios such as dialogue generation, RAG evaluations, and agent assessments.

- ✔️ Offering over 100 built-in evaluation metrics and the ability to customize metrics to meet specific needs.
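To make the idea of iterative evaluation with a custom metric concrete, here is a minimal, self-contained sketch. It does not use EvalsOne's API (which is not described here); every name in it (`SAMPLES`, `fake_model`, `keyword_coverage`, `compare_prompts`) is hypothetical, and the model call is replaced by an offline stand-in so the example runs without network access.

```python
from statistics import mean

# Hypothetical evaluation samples: an input plus keywords a good answer should mention.
SAMPLES = [
    {"input": "reset password", "expected_keywords": ["settings", "email"]},
    {"input": "cancel subscription", "expected_keywords": ["billing", "confirm"]},
]

# Two prompt variants to compare across one iteration of prompt refinement.
PROMPT_VARIANTS = {
    "v1": "Answer the user.",
    "v2": "Answer the user step by step.",
}


def fake_model(prompt, user_input):
    """Offline stand-in for a real LLM call (assumption: swap in your own client)."""
    if "step by step" in prompt:
        return f"To {user_input}: open settings, check billing, confirm via email."
    return f"Please contact support to {user_input}."


def keyword_coverage(output, expected_keywords):
    """Custom metric: fraction of expected keywords that appear in the output."""
    text = output.lower()
    hits = sum(1 for kw in expected_keywords if kw.lower() in text)
    return hits / len(expected_keywords)


def evaluate_prompt(prompt):
    """Average the custom metric over all samples for one prompt variant."""
    scores = [
        keyword_coverage(fake_model(prompt, s["input"]), s["expected_keywords"])
        for s in SAMPLES
    ]
    return mean(scores)


def compare_prompts(variants):
    """Score every prompt variant and return a name -> score mapping."""
    return {name: evaluate_prompt(prompt) for name, prompt in variants.items()}


results = compare_prompts(PROMPT_VARIANTS)
best = max(results, key=results.get)
print(results, "best:", best)
```

In practice the loop repeats: revise the weaker prompt, rerun the same samples and metric, and keep the variant that scores higher. A hosted platform would replace the stand-in model and the single metric with real model calls and a larger metric set.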

USE CASES

- Refine large language model prompts with EvalsOne's iterative evaluations, reducing the time and effort each evaluation round takes.

- Conduct detailed assessments for various evaluation scenarios such as dialogue generation, RAG evaluations, and agent assessments using EvalsOne's comprehensive evaluation platform.

- Easily evaluate a wide range of models from OpenAI, Anthropic, Google Gemini, Mistral, Microsoft Azure, or self-hosted models with EvalsOne's versatile evaluation methods and customizable metrics.