
What is Prompt Token Counter?

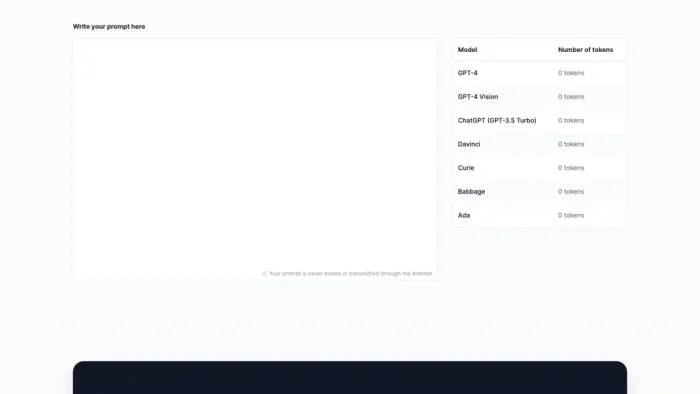

Token Counter for OpenAI Models

The Token Counter for OpenAI Models helps users keep interactions with language models such as OpenAI's GPT-3.5 within the model's token limit. Each model accepts a fixed maximum number of tokens per request, and that budget must cover both the prompt and the response. OpenAI also bills usage per token. Counting the tokens in a prompt before sending it lets users avoid exceeding the limit, manage costs, and write more concise prompts. The tool supports pre-processing prompts, counting their tokens, reserving room for the response, and refining a prompt iteratively until it fits the allowed token count. The key steps are knowing the model's token limit, tokenizing the prompt, and accounting for the tokens the response will use.
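The counting and budgeting steps described above can be sketched in Python. This is a minimal sketch, not the tool's implementation: `approx_token_count`, `fits_in_context`, and `estimate_cost` are hypothetical names, the stand-in tokenizer only approximates OpenAI's byte-pair encoding, and the context limit and per-1K-token prices in the usage lines are placeholder values, not published figures. For exact counts, pass a real tokenizer, for example one built from OpenAI's `tiktoken` library.

```python
import re
from typing import Callable


def approx_token_count(text: str) -> int:
    # Stand-in tokenizer (assumption, NOT OpenAI's tokenizer): counts words and
    # punctuation marks. For exact counts, use e.g.
    #   enc = tiktoken.encoding_for_model("gpt-3.5-turbo")
    #   count_tokens = lambda s: len(enc.encode(s))
    # Per-message formatting overhead in chat requests is not counted here.
    return len(re.findall(r"\w+|[^\w\s]", text))


def fits_in_context(
    prompt: str,
    max_response_tokens: int,
    context_limit: int,
    count_tokens: Callable[[str], int] = approx_token_count,
) -> bool:
    """Return True if the prompt plus the tokens reserved for the reply fit the window."""
    return count_tokens(prompt) + max_response_tokens <= context_limit


def estimate_cost(
    prompt_tokens: int,
    response_tokens: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
) -> float:
    """Estimate request cost from caller-supplied per-1K-token prices."""
    return (prompt_tokens * input_price_per_1k + response_tokens * output_price_per_1k) / 1000


prompt = "Summarize the following meeting notes in three bullet points."
print(approx_token_count(prompt))  # 10 with this stand-in tokenizer
# context_limit=4096 is a placeholder; check the limit of the model you use.
print(fits_in_context(prompt, max_response_tokens=500, context_limit=4096))  # True
# Hypothetical prices, for illustration only.
print(estimate_cost(1000, 500, input_price_per_1k=0.5, output_price_per_1k=1.5))  # 1.25
```

Passing the tokenizer as a parameter keeps the budget check independent of any one encoding, so swapping in an exact tokenizer does not change the check itself.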

KEY FEATURES

- ✔️ Token tracking.

- ✔️ Token management.

- ✔️ Prompt pre-processing.

- ✔️ Token count adjustment.

- ✔️ Efficient interaction management.

USE CASES

- Keep queries drafted for OpenAI's GPT-3.5 within its token limit by counting their tokens with the Token Counter before sending them.

- Control costs by tracking how many tokens prompts and responses use and trimming prompts to stay within a token budget.

- Write concise, effective prompts for OpenAI models by refining them iteratively until they fit the allowed token count.
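The iterative refinement in the last use case can be sketched as a loop that drops material until the prompt fits. This assumes, purely for illustration, that a prompt is a list of sentences ordered from most to least important; `trim_to_budget` and the word-and-punctuation tokenizer are hypothetical stand-ins, not the tool's method or OpenAI's tokenizer.

```python
import re
from typing import Callable


def approx_token_count(text: str) -> int:
    # Stand-in tokenizer (assumption): words and punctuation marks, not OpenAI's BPE.
    return len(re.findall(r"\w+|[^\w\s]", text))


def trim_to_budget(
    sentences: list[str],
    token_budget: int,
    count_tokens: Callable[[str], int] = approx_token_count,
) -> str:
    """Drop sentences from the end until the joined prompt fits token_budget."""
    kept = list(sentences)  # copy so the caller's list is not modified
    while kept and count_tokens(" ".join(kept)) > token_budget:
        kept.pop()  # remove the last (assumed least important) sentence
    return " ".join(kept)


sentences = [
    "You are a helpful assistant.",
    "Answer in English.",
    "Include at least three examples from recent history.",
]
print(trim_to_budget(sentences, token_budget=12))
```

Dropping whole sentences is only one refinement strategy; rewording or summarizing parts of the prompt are alternatives when every sentence matters.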
